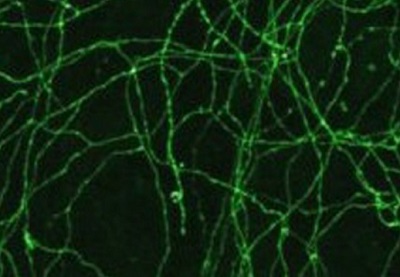

by Angela Guess

Larry Hardesty reports in MIT News, “In recent years, some of the most exciting advances in artificial intelligence have come courtesy of convolutional neural networks, large virtual networks of simple information-processing units, which are loosely modeled on the anatomy of the human brain. Neural networks are typically implemented using graphics processing units (GPUs), special-purpose graphics chips found in all computing devices with screens. A mobile GPU, of the type found in a cell phone, might have almost 200 cores, or processing units, making it well suited to simulating a network of distributed processors.”

Hardesty goes on, “At the International Solid State Circuits Conference in San Francisco this week, MIT researchers presented a new chip designed specifically to implement neural networks. It is 10 times as efficient as a mobile GPU, so it could enable mobile devices to run powerful artificial-intelligence algorithms locally, rather than uploading data to the Internet for processing. Neural nets were widely studied in the early days of artificial-intelligence research, but by the 1970s, they’d fallen out of favor. In the past decade, however, they’ve enjoyed a revival, under the name ‘deep learning’.”

Photo credit: Flickr/MikeBlogs