Part of what makes data such a challenging field to work in is the set of processes that surround it. Working with data can be relatively straightforward if done with focus and planning. Countless tools, apps, and databases are ready and waiting to help companies make the most of their data. But the practices that govern those tools, and that ensure the resulting information is put to good use, can be quite tricky. DevOps is no exception.

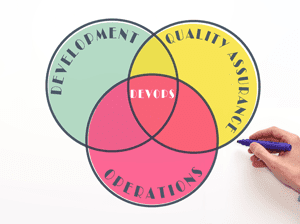

DevOps is defined by Gartner as “a change in IT culture, focusing on rapid IT service delivery through the adoption of Agile, Lean practices in the context of a system-oriented approach. DevOps emphasizes people (and culture), and seeks to improve collaboration between operations and development teams.” DevOps relies on technology, but also on culture, people, and process. When done well, DevOps can completely transform a business. According to Stephanie Stouck of Puppet, high-performing IT organizations deploy 200 times more frequently than low-performing organizations. They also spend 50% less time remediating security issues and 22% less time on unplanned work.

DATAVERSITY® recently spoke with Shashi Kiran, CMO of Quali, to learn more about the key barriers to DevOps success and how to overcome them. Quali is a leading provider of Cloud Sandboxes for automating the DevOps lifecycle. The company recently completed its annual DevOps survey, and the infographic below summarizes the results. Before joining Quali in June 2016, Mr. Kiran was head of worldwide marketing at Cisco.

Photo Credit: Quali

An edited version of DATAVERSITY’s conversation with Mr. Kiran follows.

DATAVERSITY (DV): What does DevOps look like when it’s functioning at its best? In other words, what is the ideal DevOps scenario?

Shashi Kiran: If we think of DevOps as a process, the best process is one that is entirely invisible. When properly assimilated within the organization, it increases the velocity of innovation, release quality, and collaboration among the Dev, Test, Sec, and Ops teams. Given the areas of impact that DevOps can have in an organization, it is perhaps more appropriate to think of it as a lifecycle, rather than as a point solution or a tool.

DV: Chris Cancialosi of Forbes noted that one of the major challenges of DevOps is that it is not entirely a matter of technology. Your survey found that company culture is in fact the top barrier to successful DevOps.

Shashi Kiran: DevOps is as much people- and process-centric as it is technology-centric. Together, these three elements form the culture. It therefore came as no surprise to us that culture surfaced as the top barrier to DevOps adoption in Quali’s 2016 Annual DevOps survey.

Any change in culture needs to be driven by the people and for the people. Change therefore needs to begin at the top, with the leadership and management teams understanding the potential and pitfalls of DevOps. We are seeing CIOs getting involved in DevOps conversations and tying DevOps to its impact on productivity, efficiency, and collaboration, all of which are measurable goals. Organizational champions need to be nominated and held accountable for spreading this change across the organization. Incentives need to be aligned, and evaluation mechanisms need to check progress periodically. Finally, knowledge sharing and best practices need to be brought in across the entire organization, so that there is collective diffusion and shared accountability. DevOps will not succeed at scale without these steps. The operative word here is “scale.”

DV: The second biggest barrier to DevOps that you found was test automation. Can you explain why this is the case and what companies can do to address the problem?

Shashi Kiran: Several aspects of software development and deployment are embracing automation practices. Today we see continuous integration and delivery (CI/CD) as a key initiative in terms of DevOps and release engineering. While DevOps has placed a lot of emphasis on Ops, and certainly on some aspects of software development, other software development activities have lagged behind.

Testing often gets subsumed under development. While this works for simple unit testing, testing complex and hybrid environments is an activity unto itself. As application complexity increases, traditional test methods and even simple automation no longer suffice. We’re now changing the taxonomy to talk proactively about dev/test teams instead of just dev teams.

In the context of CI/CD, we often see that, in a bid to move faster, teams are not given holistic access to complex production environments early in the test cycle, nor is that access automated. Both problems hurt quality as well as standardization and scale. It is well known that bugs are less expensive to catch and fix early in the dev/test cycle. This is one of the reasons why test automation, or rather “continuous testing,” has been flagged as an issue.

More efforts are being made to educate in this direction. We’re doing our part to promote awareness with a webinar, “Continuous Testing – The ‘Missing Link’ in DevOps and CI/CD,” with our partner Trace3. We also provide dev/test teams with on-demand, self-service access to dynamic environments. This brings standardization and increases quality while reducing risk.

DV: Your survey found that DevOps managers are deploying multiple tools to support DevOps efforts, and that this leads to a fragmented toolchain. Can you explain why using multiple tools is a problem?

Shashi Kiran: Much of what I am discussing here relates to standardization and scale. If it’s a one-off project, it does not matter which tool is used. But when the entire organization needs to come together and move toward common goals with predictability, standardization is key. This is where tool fragmentation can become a significant overhead: each tool, despite serving a similar end goal, may get there by different means. That means the learning curve, best practices, deployment principles, features, bugs, documentation, licensing, and community linkages are all different.

This “tool spaghetti” becomes a problem in larger, distributed enterprises, and rationalizing it becomes a significant overhead when there’s an attempt to bring the tools under a common practice. Very often, tool decisions are made in a decentralized way to solve specific problems, until the sprawl itself becomes an organizational burden.

At that point, attempts are made to bring uniformity and standardization so that the organization and its practices are more consistent. Doing this proactively up front, and bringing in packaged solutions that are tool agnostic, can go a long way toward minimizing disruption later, allowing organizations to stay nimble and adapt quickly.

DV: Can you talk about some of the issues that arise when a hybrid cloud environment comes face-to-face with DevOps? How can these issues be addressed?

Shashi Kiran: Today we see that almost 70% of enterprises want to adopt hybrid clouds. Why not go all-in on public cloud? There are several reasons. The most notable are legacy investments in on-premises data centers and a preference for private clouds for reasons of ownership, cost, or compliance.

At the same time, most enterprises want the agility and on-demand scale that public clouds offer. The challenge for hybrid clouds, however, is that on-premises and private cloud environments differ from public cloud environments. Further, each public cloud vendor has a different set of tools and templates, many of which are specific to that vendor. It is easy to go into a public cloud, but hard to get out. Applications need to be architected to perform well on two dissimilar types of cloud, which can be quite a challenge.

Quali’s 2016 survey pointed out that fewer than 25% of applications are suitable or ready to run in a hybrid cloud. This is a challenge. When a hybrid cloud approach comes face-to-face with DevOps, this complexity is further amplified. DevOps tools and practices aren’t entirely cloud agnostic. Further, adopting certain cloud-native toolsets from one cloud versus another, along with the customization that entails, simply magnifies both cost and complexity.

While all these issues are surmountable, they get in the way of agility and consistency, adding complexity and risk to the stack. Addressing them requires standardizing fundamental processes. It also helps to adopt sandbox environments that replicate the hybrid cloud environments and can be exposed to developers and testers early in the cycle. Having access to these blueprints can increase deployment velocity and code quality while reducing costs and risk.

…

Stephanie Stouck of Puppet wrote, “In my view, becoming a DevOps practitioner requires a certain state of mind. You’ve got to have an attitude which says, ‘I’m going to make a difference. I’m going to understand that in the business of delivering great software, we’re all in it together’.” The key takeaway from Quali’s annual DevOps survey seems to be that great DevOps is a team effort, not just within divisions but across the entire business. As such, it requires buy-in at the highest levels as soon as possible. With a culture that respects the DevOps lifecycle, time and manpower can be put to better use, output can increase, and everyone involved can go home happier at the end of each day.

Photo Credit: astephan/Shutterstock.com