Click to learn more about video blogger Thomas Frisendal.

When I googled “state of the art” “data modelling” (European spelling), I got:

- An Overview of State-of-the-Art Data Modelling – Events – ACSE – The

- An Overview of State-of-the-Art Data Modelling

- Data Modelling: Introduction and State of the Art – Springer

- Type Systems: Introduction and State of the Art

- An Overview of State-of-the-Art Data Modelling Introduction

- State Of The Art Analysis – INSPIRE Directive – Europa.eu

Actually, the first, second, and fifth results all refer to the same course given by the University of Sheffield, on subjects such as curve fitting, regression, classification, supervised and unsupervised learning, linear models, polynomials, radial basis functions, and much more. Hardly my kind of Data Modeling.

The Springer book title looks promising. It is written by two academics from the University of Glasgow and describes a project called FIDE, part of the ESPRIT program of the 1990s, which focused on Data Modelling for persistent programming languages such as TYCOON.

Hmm, indeed. Where has plain old State of the Art Data Modelling gone? If you google “best practise” “data modelling” (European spelling again), you get some articles about Data Modeling in Qlik, Azure, Cassandra, and MongoDB. Again, not quite what I am looking for.

So, let’s roll up our sleeves and do it ourselves. We need to rediscover purpose, representation, and process, and we need to define some recommended best practices for the new world of hybrid data.

The Purpose of Data Modeling

People use the term “Data Modeling” in so many different contexts that a common definition seems almost impossible. How do we unite ontologies, taxonomies, conceptual models, concept models, entity relationship models, object models, relational models, multidimensional models, graph models, NoSQL physical models, knowledge graphs, Artificial Intelligence (AI), and statistical models into one all-embracing concept?

I think the modern answer to that is context. Context is so important in all matters of business, research, and computing that it really should get our full attention. Let me underline that with a little everyday example:

Amazon likes me: I am a frequent buyer of books, and Amazon would like me to buy more. So, they send me machine-generated recommendations:

These recommendations are most likely generated by AI, but the “I” is questionable. I actually wrote the book in the middle! What is going wrong here? The answer clearly is: context. Amazon’s recommendation system does not check whether the recipient is the author of a book that it recommends. I am pretty certain that Amazon has a good knowledge graph that contains this fact. So why does Amazon not consider this context in this application?
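To make the missing step concrete, here is a minimal, hypothetical sketch of a recommender that consults context before sending its suggestions. Nothing here reflects how Amazon’s system actually works; the `KnowledgeGraph` class, the `AUTHORED` predicate, and `recommend_in_context` are invented for illustration. The knowledge graph is a toy set of (subject, predicate, object) triples, and the filter simply drops any candidate book the customer is known to have written.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeGraph:
    """A toy knowledge graph stored as (subject, predicate, object) triples (hypothetical)."""
    triples: set = field(default_factory=set)

    def add(self, subject: str, predicate: str, obj: str) -> None:
        self.triples.add((subject, predicate, obj))

    def has(self, subject: str, predicate: str, obj: str) -> bool:
        return (subject, predicate, obj) in self.triples


def recommend_in_context(candidates: list[str], customer: str, kg: KnowledgeGraph) -> list[str]:
    """Filter machine-generated candidates using context from the knowledge graph:
    drop any book that the customer authored (the AUTHORED predicate is an assumption)."""
    return [book for book in candidates if not kg.has(customer, "AUTHORED", book)]


if __name__ == "__main__":
    kg = KnowledgeGraph()
    kg.add("customer-42", "AUTHORED", "book-B")
    print(recommend_in_context(["book-A", "book-B", "book-C"], "customer-42", kg))
```

The point is not the filter itself, which is trivial, but that it can only be written once the relevant context (who wrote what) has been modeled and is available to the application.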

Context is at the heart of almost all cognitive processes, whether they are automated/computed or exercised by humans. We need context descriptions, and the need for them is not going to go away. Context description is what data models are for. Maybe we should start calling what we do “Context Modeling”?

At this year’s GraphConnect Europe user conference, Neo4j’s CEO and founder Emil Eifrem gave a very interesting keynote. One of his statements was that “Context drives meaning and precision for machine-learning systems”:

(Photo by the author)

He added: “And context is graphs!” He is so right: much of what we see today in automated, AI-based computing is driven by knowledge graphs. Knowledge graphs contain structure, meaning, and data in the form of a graph data model. This is the space where semantic technologies (RDF and ontologies) have traditionally been the preferred technologies, but today the scope is wider than that.

At the Enterprise Data World 2017 conference in Atlanta, Mike Bowers of the LDS Church gave an interesting talk about these matters. His take is that, for his organization, the right platform should support multiple styles: documents for storing data, semantics (such as RDF) for storing meaning, and graphs for relationships. MongoDB and MarkLogic are examples of DBMS vendors that offer all of that in one package. This brings us to the next topic, which is about the different kinds of models.

Representation of Data Models

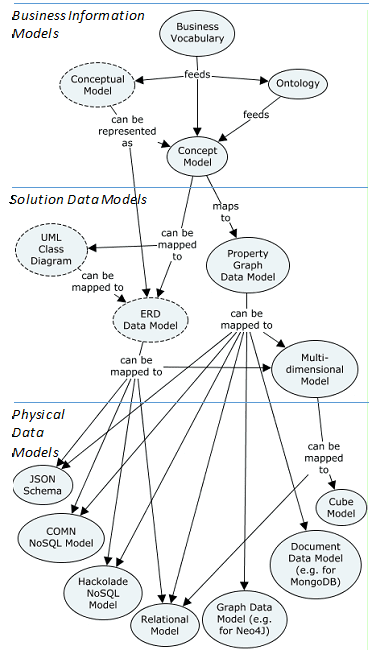

Data Models are many things to many people. They fall into three categories depending on their application:

- Business Level Information Models: These represent the “real”, human world and stay very close to nouns and verbs. They range from business vocabularies through ontologies (RDF-based etc.) to concept models, and they also comprise the “conceptual model”, which never really made it to the hall of fame.

- Solution Level Information Models: These represent designed subsets of the real world, intended to describe the context of very specific application(s). They include UML class diagrams (not used that much for data modeling anymore), ERD data models (“ERwin land”), property graph models, and multidimensional models.

- Physical Level Data Models: These come in a hatful of varieties and styles, going all the way from JSON and SQL to documents, graphs, and multidimensional cubes, with just a few approaches towards standardization (Ted Hills’s COMN notation and the Hackolade NoSQL Data Modeling tool).

In the concept map below I have tried to give an overview of the Data Modeling landscape:

(Diagram produced using CmapCloud, https://cmapcloud.ihmc.us )

I have, very impolitely, retired the styles marked with dashed outlines. The reality is that classic ERD models are not being used as prominently as before, and many people are busily going agile (more about that later).

In short, I think that from a representational point of view there are state-of-the-art solutions at the business level: business vocabularies, ontologies (semantics), and concept models, all aiming at representing structure and meaning. On the solution level, there are the multidimensional models, which are still attractive in analytical environments. Graph-based data models are quickly taking over the general-purpose Data Modeling space. In fact, property graph data models can span a very wide spectrum of solutions and map elegantly to almost all kinds of data stores in use today.

When it comes to the physical level, there is a lot of proprietary work going on (from many DBMS vendors and just a few tool vendors). I think that COMN and Hackolade are going to be successful. I do not need to say that about property graphs, because they are already highly successful, both in graph-style solutions and as the knowledge graphs used by Google and many other large IT companies. In my opinion, property graphs are the way to go for most kinds of solutions (including solutions that end up being supported partly or fully by relational databases).
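As an illustration of how a property graph model can land in a relational database, here is a minimal sketch under simple, assumed rules: each node label becomes a table with a surrogate `id` key plus its properties, and each relationship type becomes a link table with foreign keys to its start and end nodes plus the relationship’s own properties. The `NodeType`, `RelationshipType`, and `to_ddl` names and the mapping rules are hypothetical, not taken from COMN, Hackolade, or any other tool.

```python
import sqlite3
from dataclasses import dataclass, field


@dataclass
class NodeType:
    label: str                      # node label, e.g. "Author"
    properties: dict[str, str]      # property name -> SQL column type


@dataclass
class RelationshipType:
    name: str                       # relationship type, e.g. "WROTE"
    source: str                     # label of the start node
    target: str                     # label of the end node
    properties: dict[str, str] = field(default_factory=dict)


def to_ddl(nodes: list[NodeType], relationships: list[RelationshipType]) -> list[str]:
    """Map a property graph model to relational DDL (assumed rules):
    one table per node label, one link table per relationship type."""
    statements = []
    for node in nodes:
        columns = ["id INTEGER PRIMARY KEY"]
        columns += [f"{name} {sql_type}" for name, sql_type in node.properties.items()]
        statements.append(f"CREATE TABLE {node.label} ({', '.join(columns)});")
    for rel in relationships:
        # Generic from_id/to_id columns also work when start and end node share a label.
        columns = [
            f"from_id INTEGER NOT NULL REFERENCES {rel.source}(id)",
            f"to_id INTEGER NOT NULL REFERENCES {rel.target}(id)",
        ]
        columns += [f"{name} {sql_type}" for name, sql_type in rel.properties.items()]
        statements.append(f"CREATE TABLE {rel.name} ({', '.join(columns)});")
    return statements


if __name__ == "__main__":
    model_nodes = [NodeType("Author", {"name": "TEXT"}), NodeType("Book", {"title": "TEXT"})]
    model_rels = [RelationshipType("WROTE", "Author", "Book", {"role": "TEXT"})]
    conn = sqlite3.connect(":memory:")
    for stmt in to_ddl(model_nodes, model_rels):
        print(stmt)
        conn.execute(stmt)  # check that SQLite accepts the generated DDL
```

A link table per relationship type handles many-to-many cases uniformly; a real mapping might instead fold one-to-many relationships into a foreign key column on the node table, a choice this sketch deliberately does not make.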

How do we go about producing those models in the post-modern world?

The Process of Data Modeling

It is a fact of life that the state of Data Modeling efforts could be a lot better. Jim Duggan (a retired Gartner analyst who covered the Data Modeling space) says, in a private communication, that he:

“Hasn’t seen any formal end-to-end modeling for years. Some is still done in government practice, but most agile approaches start with definition of workflow and objects, and then have the DM team accommodate.”

I agree, with the exception that physical modeling is quite healthy. In the Data Warehouse space, where I have spent the last 20 years or so, top-down, up-front Data Modeling survived longer than in the application development space. The Enterprise Data Model, too, never made it to the hall of fame. The state of the art of the Data Modeling process is being renegotiated.

The catalyst for the last 10 years of evolution has been short delivery times:

- Agile methodologies with their release trains and so forth

- Self-service BI evolving into smart data discovery and agile data preparation

However, the lessons learned from the knowledge graph paradigm show us that understanding the structure and meaning of the context is of great importance. That understanding must be gained at the business level, using the business vocabulary. This takes time, but as Emil Eifrem pointed out, it is a matter of quality and precision.

By the way, there are some interesting challenges in integrating better with what Data Scientists also call models.

What we are seeing is two sets of requirements working against one another:

And the holy grail in the middle is AI-based machine assistance in the form of “Smart Data Discovery”. That is the game-changer, and it is still in the leading (not bleeding) edge category. It is here to stay, and I delved into it in a blog post here on DATAVERSITY® in February of this year.
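To give a feel for the bottom-up, machine-assisted side of this, here is a deliberately tiny sketch of schema discovery: it scans sample JSON documents and reports, per top-level field, which JSON types were observed and whether the field occurs in every document. Real Smart Data Discovery products do far more; the `infer_schema` and `json_type` functions are hypothetical and only handle flat, top-level fields. Fields with mixed types or that are not always present are exactly the kind of inconsistency a human modeler would still need to resolve.

```python
import json
from collections import defaultdict


def json_type(value) -> str:
    """Name the JSON type of a parsed value."""
    if value is None:
        return "null"
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "boolean"
    if isinstance(value, (int, float)):
        return "number"
    if isinstance(value, str):
        return "string"
    if isinstance(value, list):
        return "array"
    return "object"


def infer_schema(records: list[dict]) -> dict[str, dict]:
    """Infer a flat, top-level schema from sample documents: the JSON types
    seen per field and whether the field occurs in every record."""
    seen_types = defaultdict(set)
    occurrences = defaultdict(int)
    for record in records:
        for key, value in record.items():
            seen_types[key].add(json_type(value))
            occurrences[key] += 1
    return {
        key: {"types": sorted(seen_types[key]), "required": occurrences[key] == len(records)}
        for key in seen_types
    }


if __name__ == "__main__":
    sample = [json.loads(line) for line in (
        '{"id": 1, "name": "Ann", "tags": ["vip"]}',
        '{"id": 2, "name": null}',
    )]
    for field_name, info in infer_schema(sample).items():
        # Several types, or required == False, mark fields for human review.
        print(field_name, info)
```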

Conclusion

The best way forward is to build business value on a solid understanding of the contexts of the business processes. However, there is also considerable business value in being agile and delivering with speed. We need to handle the “schema last” approach in a controlled (curated) manner. Machine-assisted modeling is the key to understanding the structure and meaning of a context, as well as to ingesting schemas from the data (“Smart Data Discovery”). Modeling still needs humans who understand the business and are able to resolve inconsistencies, errors, lack of precision, etc. So, in a sense, Data Modeling is still going strong, only now working bottom-up using powerful new tools!