by Angela Guess

Aaron Krumins recently wrote in Extreme Tech, “One of the more disturbing trends in robotics is how often some researchers gloss over the moral complexities of AI by suggesting Isaac Asimov’s ‘Three Laws of Robotics’ will be sufficient to handle any ambiguities robots encounter… There are encouraging signs, however, that at least some other researchers are taking the problem seriously. Two figures leading the charge in this direction are Mark Riedl and Brent Harrison of the Georgia Institute of Technology. They are pioneering a system called Quixote, by which an artificial intelligence learns ‘value alignment’ by reading stories from different cultures.”

Krumins goes on, “This may seem a strange approach to the problem — until one considers how regional questions of morality are. For example, two counties only a few miles apart in the United States may have vastly different ways of perceiving moral and ethical issues. For instance, spanking your child may be considered a morally unacceptable act among an affluent urban population, whereas immigrants or more rural communities may find this form of punishment more acceptable. This is not to cast aspersions in either direction, but simply to point out that even among humans, there is little consensus on moral and ethical issues. How much more difficult will it be for humans to agree on what constitutes ethical robot behavior?”

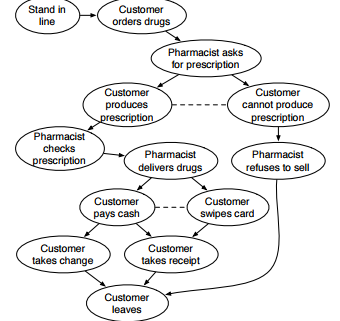

photo credit: Quixote