Co-authors: Ion Stoica and Ben Lorica.

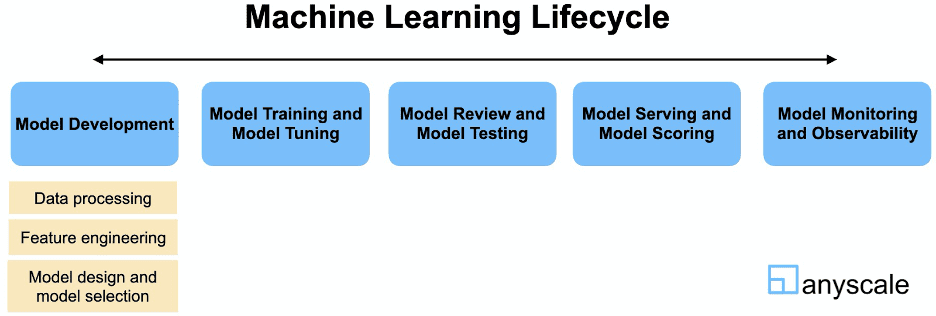

Machine learning is being embedded in applications that involve many data types and data sources, which means that software developers from different backgrounds now need to work on projects that involve ML. In our previous post, we listed key features that machine learning platforms need in order to support current and future workloads. We also described MLOps, a set of practices focused on productionizing the machine learning lifecycle.

In this post, we focus on model servers: the software at the heart of machine learning services that operate in real time or offline. There are two common approaches to serving machine learning models. The first embeds model evaluation in a web server (e.g., Flask), exposing it as an API endpoint dedicated to serving predictions.
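As a rough illustration of the first approach, here is a minimal sketch in which the model lives inside the web server process. To stay dependency-free it uses a bare WSGI application (the interface Flask applications are built on) rather than Flask itself; the `/predict` route, the JSON payload shape, and the toy linear model are all hypothetical.

```python
import io
import json
from wsgiref.util import setup_testing_defaults

# Hypothetical stand-in for a trained model loaded once at server startup.
WEIGHTS = (0.4, -0.2, 0.1)
BIAS = 0.05


def predict(features):
    """Score one feature vector with the toy linear model."""
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def _json_response(start_response, status, obj):
    body = json.dumps(obj).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def app(environ, start_response):
    """WSGI app: model evaluation runs inside the web server process itself."""
    if environ.get("PATH_INFO") != "/predict" or environ.get("REQUEST_METHOD") != "POST":
        return _json_response(start_response, "404 Not Found", {"error": "not found"})
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
        features = [float(v) for v in payload["features"]]
    except (ValueError, KeyError, TypeError):
        return _json_response(start_response, "400 Bad Request",
                              {"error": "expected a JSON object with a 'features' list"})
    return _json_response(start_response, "200 OK", {"prediction": predict(features)})


def call_in_process(path, payload, method="POST"):
    """Invoke the app directly, without a socket (handy for tests)."""
    body = json.dumps(payload).encode("utf-8")
    environ = {"PATH_INFO": path, "REQUEST_METHOD": method,
               "CONTENT_LENGTH": str(len(body)), "wsgi.input": io.BytesIO(body)}
    setup_testing_defaults(environ)  # fills in the remaining required WSGI keys
    captured = {}
    chunks = app(environ, lambda status, headers: captured.setdefault("status", status))
    return captured["status"], json.loads(b"".join(chunks))
```

To serve it over HTTP, hand `app` to any WSGI server, for example `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()`. One consequence of this design is that the model and the web tier scale together: every web worker process holds its own copy of the model.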

The second approach offloads model evaluation to a separate service. This is an active area for startups, and the number of options in this category is growing. Offerings include services from cloud providers (SageMaker, Azure, Google Cloud), open source model-serving projects (Ray Serve, Seldon, TorchServe, TensorFlow Serving, etc.), proprietary software (SAS, Datatron, ModelOps, etc.), and bespoke solutions, usually built on a general-purpose framework.

While machine learning can be used for one-off projects, most developers seek to embed machine learning across their products and services. Model servers are important components of software infrastructure for productionizing machine learning, and, as such, companies need to carefully evaluate their options. This post focuses on key features companies should look for in a model server.

Support for Popular Toolkits

Your model server is probably separate from your model training system, so choose one that can load trained model artifacts produced by a range of popular tools. Developers and machine learning engineers build models with many different libraries, including deep learning frameworks (PyTorch, TensorFlow) and machine learning and statistics packages (scikit-learn, XGBoost, SAS, statsmodels). Model builders also continue to use a variety of programming languages: while Python has emerged as the dominant language for machine learning, R, Java, Scala, Julia, and SAS still have many users. More recently, many companies have adopted data science workbenches like Databricks, Cloudera, Dataiku, Domino Data Lab, and others.
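One way to keep a model server toolkit-agnostic is an adapter registry: each artifact format gets a loader that returns an object with a common `predict` method, so the serving code never needs to know which library produced the model. The sketch below is hypothetical; the format names and the toy `LinearModel` stand in for real loaders that would wrap each library's own loading function.

```python
import json
import pickle
from typing import Callable, Dict, List, Protocol


class Model(Protocol):
    """Common interface every loaded model must expose to the serving layer."""
    def predict(self, rows: List[List[float]]) -> List[float]: ...


# Maps an artifact format name to a function that turns raw bytes into a Model.
LOADERS: Dict[str, Callable[[bytes], Model]] = {}


def register(fmt: str):
    """Decorator that adds a loader for one artifact format."""
    def decorator(fn: Callable[[bytes], Model]) -> Callable[[bytes], Model]:
        LOADERS[fmt] = fn
        return fn
    return decorator


class LinearModel:
    """Toy model used for illustration: a linear scorer."""
    def __init__(self, coef: List[float], intercept: float):
        self.coef = coef
        self.intercept = intercept

    def predict(self, rows: List[List[float]]) -> List[float]:
        return [sum(c * x for c, x in zip(self.coef, row)) + self.intercept for row in rows]


@register("json-linear")
def load_json_linear(blob: bytes) -> Model:
    # Hypothetical format: {"coef": [...], "intercept": ...}
    spec = json.loads(blob)
    return LinearModel(spec["coef"], spec["intercept"])


@register("pickle")
def load_pickle(blob: bytes) -> Model:
    # Unpickling can execute arbitrary code: only load artifacts you trust.
    return pickle.loads(blob)


def load_artifact(fmt: str, blob: bytes) -> Model:
    """Dispatch to the loader registered for `fmt`."""
    loader = LOADERS.get(fmt)
    if loader is None:
        raise ValueError(f"unsupported artifact format: {fmt!r}")
    return loader(blob)
```

Adding support for another toolkit then means registering one more loader, without touching the request-handling code.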

A GUI for Model Deployment and More

Developers may be comfortable with a command-line interface, but enterprise users will want a graphical user interface that guides them through deploying models and highlights the different stages of the machine learning lifecycle. As their deployment processes mature, teams may shift toward scripting and automation. Model servers with user interfaces include Seldon Deploy, SAS Model Manager, Datatron, and others that target enterprise users.

Easy to Operate and Deploy but with High Performance and Scalability

As machine learning gets embedded in critical applications, companies will need low-latency model servers that can power large-scale prediction services. Companies like Facebook and Google have machine learning services that provide real-time responses billions of times each day. While these might be extreme cases, many companies also deploy applications like recommendation and personalization systems that interact with many users on a daily basis. With the availability of open source software, companies now have access to low-latency model servers that can scale to many machines.

Most model servers use a microservice architecture and are accessible through a REST or gRPC API. This makes it easier to integrate machine learning services (a “recommender”) with other services (a “shopping cart”). Depending on your setup, you may want a model server that lets you deploy models in the cloud, on premises, or both. Your model server must also work with infrastructure features like auto-scaling, resource management, and hardware provisioning.
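A minimal sketch of the separate-service approach, using only the Python standard library: a hypothetical recommender runs as its own HTTP service, and the shopping-cart side calls it over REST. The `/recommend` route, the lookup-table "model", and the JSON shapes are assumptions for illustration; a production setup would add a real model, authentication, retries, and possibly gRPC instead of JSON over HTTP.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import List

# Hypothetical "model": a lookup table standing in for a trained recommender.
RELATED_ITEMS = {"laptop": ["mouse", "laptop bag"], "phone": ["case", "charger"]}


class RecommenderHandler(BaseHTTPRequestHandler):
    """Model-server side: POST /recommend with {"cart": [...]} returns recommendations."""

    def do_POST(self) -> None:
        if self.path != "/recommend":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        cart = json.loads(self.rfile.read(length))["cart"]
        recs = [r for item in cart for r in RELATED_ITEMS.get(item, []) if r not in cart]
        body = json.dumps({"recommendations": recs}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep the demo quiet


def start_model_server(port: int = 0) -> HTTPServer:
    """Run the model service in a background thread; port 0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), RecommenderHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def recommend(base_url: str, cart: List[str]) -> List[str]:
    """Shopping-cart side: call the separate model service over REST."""
    request = urllib.request.Request(
        f"{base_url}/recommend",
        data=json.dumps({"cart": cart}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # An empty ProxyHandler bypasses any environment proxy for this local call.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(request, timeout=5) as response:
        return json.loads(response.read())["recommendations"]
```

Because the model sits behind a network API, it can be scaled, redeployed, or reimplemented independently of the cart service, as long as the request and response format stays the same.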

Some model servers have recently added features that reduce complexity, boost performance, and provide flexible options for integrating with other services. With the introduction of a new Tensor data type, RedisAI supports data locality, a feature that lets users get and set Tensors from their favorite client and “run their AI model where their data lives.” Ray Serve brings model evaluation logic closer to business logic by giving developers end-to-end control of the request path, from the API endpoint to model evaluation and back. It is also easy to operate and as easy to deploy as a simple web server.

Includes Tools for Testing, Deployment, and Rollouts

Once a model is trained, it has to be reviewed and tested before it is deployed. Seldon Deploy, Datatron, and other model servers let you test a model with a single prediction or with a load test. To help identify errors, these model servers also let you upload test data and visualize the resulting predictions.
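The two kinds of checks mentioned above can be approximated in a few lines. This hypothetical harness runs a single-prediction smoke test and a small concurrent load test against any Python callable, reporting latency percentiles. The function names are our own, and for a deployed model the callable would wrap a client request; it is no substitute for a model server's built-in tooling or a dedicated load-testing tool.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, Sequence


def smoke_test(predict: Callable[[Any], float], example: Any, expected: float,
               tol: float = 1e-6) -> bool:
    """Single-prediction check: one known input should give one known output."""
    return abs(predict(example) - expected) <= tol


def load_test(predict: Callable[[Any], Any], inputs: Sequence[Any],
              concurrency: int = 8) -> Dict[str, float]:
    """Send every input through `predict` from a thread pool and summarize latency in ms."""
    if len(inputs) < 2:
        raise ValueError("need at least two requests to compute percentiles")

    def timed(x: Any) -> float:
        start = time.perf_counter()
        predict(x)
        return (time.perf_counter() - start) * 1000.0

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed, inputs))
    # 99 cut points; "inclusive" keeps every percentile within the observed range.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "requests": len(latencies),
        "p50_ms": cuts[49],
        "p99_ms": cuts[98],
        "max_ms": max(latencies),
    }
```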

Once your model has been reviewed and tested, your model server should let you safely promote and demote models. Beyond simple promotion and demotion, popular rollout patterns include:

- Canary: A small fraction of requests is sent to the new model, while the bulk of requests continues to go to the existing model.

- Shadowing: Production traffic is copied to a non-production service to test the model before running it in production.
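A sketch of both rollout patterns as plain Python routers, assuming models are simple callables. The class names and the `canary_fraction` parameter are hypothetical, not any particular model server's API.

```python
import random
from typing import Any, Callable, List, Optional, Tuple

Model = Callable[[Any], Any]


class CanaryRouter:
    """Send roughly `canary_fraction` of requests to the candidate, the rest to the stable model."""

    def __init__(self, stable: Model, candidate: Model, canary_fraction: float,
                 seed: Optional[int] = None):
        if not 0.0 <= canary_fraction <= 1.0:
            raise ValueError("canary_fraction must be in [0, 1]")
        self.stable = stable
        self.candidate = candidate
        self.canary_fraction = canary_fraction
        self._rng = random.Random(seed)

    def __call__(self, x: Any) -> Tuple[str, Any]:
        if self._rng.random() < self.canary_fraction:
            return "candidate", self.candidate(x)
        return "stable", self.stable(x)


class ShadowRouter:
    """Serve every request from production; mirror it to the shadow model for comparison only."""

    def __init__(self, production: Model, shadow: Model):
        self.production = production
        self.shadow = shadow
        self.comparisons: List[Tuple[Any, Any, Any]] = []

    def __call__(self, x: Any) -> Any:
        result = self.production(x)
        # Synchronous for simplicity; a real deployment would mirror traffic
        # asynchronously so the shadow adds no latency to user requests.
        try:
            shadow_result = self.shadow(x)
        except Exception as exc:  # a failing shadow must never affect users
            shadow_result = repr(exc)
        self.comparisons.append((x, result, shadow_result))
        return result
```

Ramping a canary up is then a matter of raising `canary_fraction` in steps while watching metrics, and the shadow's `comparisons` log is what you would inspect before promoting the shadow model.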

Ideally, rollout tools are completely automatable, so your deployment tools can be plugged into your CI/CD or MLOps process.

Support for Complex Deployment Patterns

As your use of machine learning grows, your model server should be able to support many models in production, as well as complex deployment patterns that involve more than one model at a time, including:

- A/B Tests: A fraction of requests is served by one model and the rest by another, so their results can be compared.

- Ensembles: Multiple models are combined to form a more powerful predictive model.

- Cascade: If a baseline model produces a prediction with low confidence, the request is routed to an alternative model. Cascades also support refinement: one model detects whether a picture contains a car and, if it does, passes the picture to a second model that reads the car’s license plate.

- Multi-Armed Bandit: A form of reinforcement learning in which traffic is allocated across several competing models, with more traffic gradually shifted toward those that perform best.
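Each of the four patterns above can be sketched in a few lines. Everything here is a hypothetical illustration: models are plain callables, the ensemble is a simple average (one of many ways to combine models), the cascade expects the baseline to return a `(label, confidence)` pair, and the bandit uses the epsilon-greedy strategy, one of several common bandit algorithms.

```python
import hashlib
import random
from typing import Any, Callable, Dict, Hashable, Optional, Sequence, Tuple

Model = Callable[[Any], float]


def ab_assign(user_id: str, fraction_b: float) -> str:
    """A/B test: hash the user ID so each user always lands in the same variant."""
    bucket = int(hashlib.sha256(user_id.encode("utf-8")).hexdigest(), 16) % 10_000
    return "B" if bucket < fraction_b * 10_000 else "A"


def ensemble(models: Sequence[Model], x: Any) -> float:
    """Ensemble: combine models by averaging their scores."""
    return sum(m(x) for m in models) / len(models)


def cascade(baseline: Callable[[Any], Tuple[Any, float]], fallback: Callable[[Any], Any],
            x: Any, threshold: float = 0.8) -> Tuple[Any, str]:
    """Cascade: keep the baseline's answer unless its confidence is below threshold."""
    label, confidence = baseline(x)
    if confidence >= threshold:
        return label, "baseline"
    return fallback(x), "fallback"


class EpsilonGreedyBandit:
    """Multi-armed bandit: mostly exploit the best-performing model, sometimes explore."""

    def __init__(self, arms: Sequence[Hashable], epsilon: float = 0.1,
                 seed: Optional[int] = None):
        self.arms = list(arms)
        self.epsilon = epsilon
        self._rng = random.Random(seed)
        self.counts: Dict[Hashable, int] = {a: 0 for a in self.arms}
        self.totals: Dict[Hashable, float] = {a: 0.0 for a in self.arms}

    def choose(self) -> Hashable:
        # Try every arm once before comparing averages.
        for arm in self.arms:
            if self.counts[arm] == 0:
                return arm
        if self._rng.random() < self.epsilon:
            return self._rng.choice(self.arms)  # explore
        return max(self.arms, key=lambda a: self.totals[a] / self.counts[a])  # exploit

    def update(self, arm: Hashable, reward: float) -> None:
        """Record the observed reward (e.g., a click or conversion) for one request."""
        self.counts[arm] += 1
        self.totals[arm] += reward
```

Hashing the user ID keeps each user on the same variant across requests, which makes an A/B comparison cleaner than a per-request coin flip.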

Out-of-the-Box Metrics and Monitoring

Machine learning models can degrade over time, so it’s important to have systems in place that flag when models become less accurate or begin to show bias or other unexpected behavior. Your model server should emit performance, usage, and custom metrics that can be consumed by visualization and real-time monitoring tools. Some model servers are beginning to provide advanced capabilities, including anomaly detection and alerts. There are even startups (Superwise, Arize) that focus on using “machine learning to monitor machine learning.” These are currently specialized tools that must be integrated separately with model servers, but it’s quite likely that some model servers will build advanced monitoring and observability capabilities into their own offerings.
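As a sketch of the metrics a model server might emit, the hypothetical wrapper below counts requests and errors and keeps a rolling window of latencies and predictions. The `psi` function computes the population stability index, one common way to quantify how far a recent distribution of predictions (or inputs) has shifted from a baseline. Names, window size, and bin edges are illustrative assumptions; in practice these numbers would be exported to a monitoring system.

```python
import bisect
import math
import time
from collections import deque
from typing import Any, Callable, Dict, Sequence


class ModelMetrics:
    """Counts requests and errors; keeps rolling windows of latency and predictions."""

    def __init__(self, window: int = 1000):
        self.requests = 0
        self.errors = 0
        self.latencies_ms: deque = deque(maxlen=window)
        self.predictions: deque = deque(maxlen=window)

    def observe(self, predict: Callable[[Any], Any], x: Any) -> Any:
        """Call the model, recording latency for every call and the output for successful ones."""
        self.requests += 1
        start = time.perf_counter()
        try:
            y = predict(x)
        except Exception:
            self.errors += 1
            raise
        finally:
            self.latencies_ms.append((time.perf_counter() - start) * 1000.0)
        self.predictions.append(y)
        return y

    def snapshot(self) -> Dict[str, float]:
        """Point-in-time values a monitoring system could scrape."""
        return {
            "requests": self.requests,
            "error_rate": self.errors / self.requests if self.requests else 0.0,
            "mean_latency_ms": (sum(self.latencies_ms) / len(self.latencies_ms)
                                if self.latencies_ms else 0.0),
        }


def psi(expected: Sequence[float], actual: Sequence[float], edges: Sequence[float],
        eps: float = 1e-6) -> float:
    """Population stability index between two samples, using shared interior bin edges."""
    def shares(sample: Sequence[float]):
        counts = [0] * (len(edges) + 1)
        for v in sample:
            counts[bisect.bisect_right(edges, v)] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(sample), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

PSI is zero when the two binned distributions match and grows as they diverge; the alerting threshold is a judgment call that teams typically tune per model.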

Integrates with Model Management Tools

As you deploy more models to production, your model server will need to integrate with your model management tools. These tools come under many labels (access control, model catalog, model registry, model governance dashboard), but in essence they give you a 360-degree view of past and current models.
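At its core, a model registry is a versioned catalog. The hypothetical sketch below keeps every registered version of a model, tracks which stage each one is in, and archives the previous production version on promotion; the stage names and `artifact_uri` values are assumptions, not any specific product’s API.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_uri: str
    stage: str = "staging"  # hypothetical stages: staging -> production -> archived
    created_at: float = field(default_factory=time.time)
    metadata: Dict[str, Any] = field(default_factory=dict)


class ModelRegistry:
    """In-memory catalog of every version of every model."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}

    def register(self, name: str, artifact_uri: str, **metadata: Any) -> ModelVersion:
        """Add a new version; version numbers start at 1 and increase."""
        versions = self._versions.setdefault(name, [])
        mv = ModelVersion(name, len(versions) + 1, artifact_uri, metadata=metadata)
        versions.append(mv)
        return mv

    def promote(self, name: str, version: int) -> ModelVersion:
        """Make `version` the production model and archive the one it replaces."""
        versions = self._versions.get(name, [])
        if not 1 <= version <= len(versions):
            raise ValueError(f"{name} has no version {version}")
        for mv in versions:
            if mv.stage == "production":
                mv.stage = "archived"
        target = versions[version - 1]
        target.stage = "production"
        return target

    def production(self, name: str) -> Optional[ModelVersion]:
        """The version currently serving traffic, if any."""
        return next((mv for mv in self._versions.get(name, []) if mv.stage == "production"), None)

    def history(self, name: str) -> List[ModelVersion]:
        """Past and current versions, oldest first."""
        return list(self._versions.get(name, []))
```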

Because models will need to be inspected periodically, your model server should interface with services for auditing and reproducing models. Model versioning is now standard and comes with most of the model servers we examined. Datatron has a model governance dashboard that provides tools for auditing underperforming models. Many model servers have data lineage services that record when requests were sent and what the model inputs and outputs were. Debugging and auditing models also requires understanding which inputs drive their predictions; Seldon Deploy, for example, integrates with an open source tool for model inspection and explainability.
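Request-level lineage of this kind can be as simple as an append-only log. This hypothetical wrapper records a request ID, timestamp, model name and version, inputs, and outputs as one JSON line per prediction; a real deployment would write to durable storage rather than the in-memory buffer used in the example.

```python
import io
import json
import time
import uuid
from typing import Any, Callable, TextIO


def with_lineage(predict: Callable[[Any], Any], model_name: str, model_version: int,
                 sink: TextIO) -> Callable[[Any], Any]:
    """Wrap a predict function so every call appends one JSON lineage record to `sink`."""
    def wrapped(features: Any) -> Any:
        output = predict(features)
        record = {
            "request_id": str(uuid.uuid4()),
            "timestamp": time.time(),  # seconds since the epoch
            "model": model_name,
            "version": model_version,
            "inputs": features,
            "outputs": output,
        }
        sink.write(json.dumps(record) + "\n")
        return output
    return wrapped


# Example: log to an in-memory buffer with a toy model.
buffer = io.StringIO()
scored = with_lineage(lambda f: sum(f), "churn", 2, buffer)
scored([1, 2])
```

Because each record carries the model version, an auditor can later replay the logged inputs against that exact version to reproduce a disputed prediction.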

Unifies Batch and Online Scoring

Suppose you have updated your model, or you have received a large number of new records. In either case, you may need to apply your model to a large dataset. You will need a model server that can score large datasets efficiently in mini-batches while also providing low-latency online scoring (Ray Serve, for example, supports both batch and online scoring).
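One way to unify the two modes is to write the model against batches and treat an online request as a batch of one. The sketch below assumes a hypothetical `predict_batch` function with made-up weights; the offline path streams a large dataset through it in fixed-size mini-batches without loading everything into memory.

```python
from itertools import islice
from typing import Iterable, Iterator, List, Sequence

WEIGHTS = (0.5, -1.0, 2.0)  # hypothetical model parameters


def predict_batch(rows: Sequence[Sequence[float]]) -> List[float]:
    """Score many rows in one call (where a real model would vectorize)."""
    return [sum(w * x for w, x in zip(WEIGHTS, row)) for row in rows]


def predict_online(row: Sequence[float]) -> float:
    """Online path: a single low-latency request is just a batch of one."""
    return predict_batch([row])[0]


def score_offline(rows: Iterable[Sequence[float]], batch_size: int = 1024) -> Iterator[float]:
    """Offline path: stream a large dataset through the same model in mini-batches."""
    it = iter(rows)
    while True:
        chunk = list(islice(it, batch_size))
        if not chunk:
            return
        yield from predict_batch(chunk)
```

Sharing one scoring function between both paths means batch and online results cannot silently diverge after a model update.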

Summary

As machine learning gets embedded in more software applications, companies need to select their model servers carefully. While Ray Serve is a relatively new open source model server, it already has many of the features we’ve listed in this post. It is a scalable, simple, and flexible tool for deploying, operating, and monitoring machine learning models. As we noted in our previous post, we believe that Ray and Ray Serve will be the foundations of many ML platforms in the future.