Key Takeaways

- Implementing AI at scale successfully requires strong AI governance and strategy.

- AI maturity assessments help identify critical deficiencies and actionable steps for AI readiness.

- An incremental, collaborative approach lays the foundation for successful AI governance.

Organizations across sectors are under increasing pressure to implement AI programs that deliver cost savings and operational efficiencies. However, they face two substantial roadblocks: data silos and a lack of AI governance processes.

Riham Elhabyan, senior advisor for AI and data policy at the Department of Fisheries and Oceans (DFO), recently shared her journey implementing Canada’s national AI strategy in her presentation at DATAVERSITY’s Data Governance & Information Quality (DGIQ) conference. She expanded on how structured governance approaches can transform AI experiments into production-ready solutions.

Canada’s Blueprint for AI Excellence

Canada’s AI strategy aims to enhance service delivery to its citizens while leveraging domestic AI expertise. Realizing this vision requires guidance on adopting AI responsibly, organized around four clear priorities:

- Centralized AI Capacity: The Canadian government prioritizes the sharing of AI expertise and infrastructure support among different departments. It wants to break down silos and reallocate resources as needed.

- Modernized Policy and Governance: The pace of AI innovation demands that Canada modernize its laws and create new frameworks, explains Elhabyan. Both are necessary to keep up with new developments and ensure ethical AI use.

- Increased AI Capabilities: To increase AI capabilities, Canada emphasizes talent and training in AI literacy. Each department needs to “identify learning battles, assess the current workforce AI capabilities, and educate them as needed,” says Elhabyan.

- Assurance of Public Trust: Canada is implementing accountability mechanisms to mitigate AI risks, says Elhabyan. Transparency and public engagement are also needed to show citizens that AI is being used responsibly.

DFO needed a systematic approach to translate Canada’s AI strategy into meaningful actions and results. This implementation challenge led Elhabyan to focus on governance as a necessary bridge between strategy and operations.

What Is AI Governance?

Before proceeding, Elhabyan needed a clear definition of AI governance to guide DFO’s approach. After reviewing existing definitions, she identified the main components of AI governance as:

- A framework for responsible and ethical AI policies, tools, processes, and structures

- Clear data governance roles and responsibilities, specifying who should be accountable for what during the development cycle

- Assurance that AI is used fairly, with trust and transparency built in

- Comprehensive policies for mitigating risks and implementing AI frameworks

Elhabyan used this definition as the foundation of DFO’s approach, aiming to proactively achieve the responsible and ethical AI envisioned in Canada’s strategy.

Why Does AI Governance Matter?

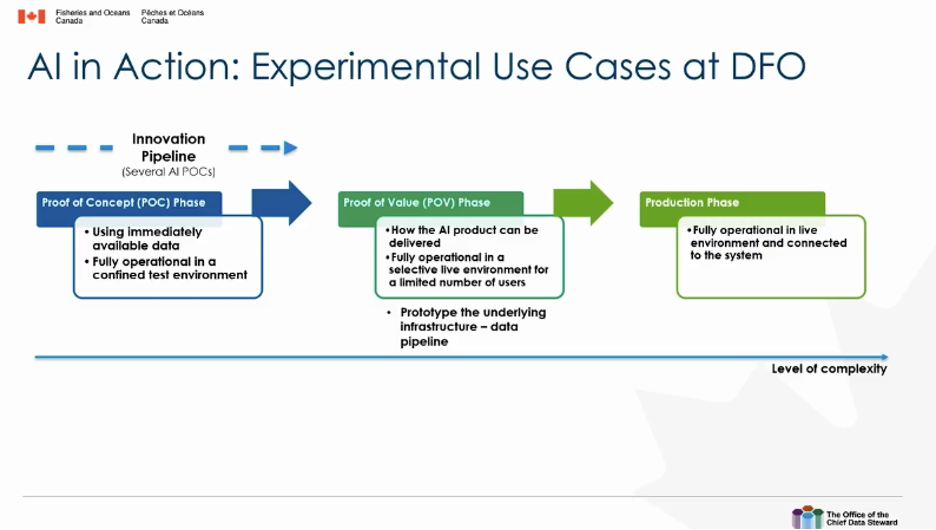

With a governance definition in hand, Elhabyan and her team identified use cases and tested them through an innovation pipeline. She wanted to have AI fully operational as soon as possible.

As shown in the diagram above, DFO ran several proofs of concept (POCs) in parallel, using small datasets in test environments. Elhabyan said that an AI model trained in these test environments does not “have the same performance in a production solution. So that’s why we go through a field test or what we call proof of value (POV).”

The field tests ran in a selected live environment with a limited number of users. The POVs provided insight into the infrastructure and KPI needs underlying AI usage. Elhabyan and DFO got right to work running the pilots without hashing out all the AI governance details.

As a result, the AI use cases succeeded in the POC phase but failed in the POV and production phases. DFO received this important feedback quickly. Consequently, the government of Canada avoided wasting resources on scaling up the AI project and refocused on the additional governance mechanisms needed to move the use cases forward.


AI Maturity as the First Step to Value

Deciding what kind of governance to add in support of AI use cases required knowing the current state, the milestones to reach, and the next actions to take. DFO also needed its stakeholders aligned on the challenges it faced and on the methodology for addressing them.

So, Elhabyan turned to a maturity assessment. She initiated conversations with many stakeholder teams: the chief digital office, lines of business, chief data stewards, IT, and senior managers. They were on board with her message:

“If you want to really gain value of AI, put upfront investment into governance. Evaluate AI capabilities and identify strengths and gaps. Use this information to guide the strategic planning, baseline current capabilities, increase maturity and scalability, and establish a road map for improvement.”

With Infotech’s help, DFO decided on the following dimensions for assessment:

- AI Governance: Do we have enterprise-wide long-term strategy and clear alignment with what is required to accomplish it?

- Data Management: Do we have a data-centric culture that shares data across the enterprise?

- Talent: Do we have skilled people in place to deliver AI applications and the recruitment mechanisms to support this expertise?

- Process: Do we have processes and supporting tools to deliver on AI expectations?

- Technology: Do we have the required data and technology infrastructure to support AI-driven transformation?

Elhabyan and her team then ran the AI governance maturity assessment.

AI Maturity Assessment Results

Elhabyan received the following AI maturity assessment results:

The blue markings showed DFO’s current state across all dimensions, while the green represented its strategic target. The assessment exposed minimal data management capabilities across the five areas DFO assessed. With this baseline, DFO could determine which governance components to add.
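Comparing a current state against a target state, dimension by dimension, is essentially a gap analysis. The sketch below shows one way such results could be turned into a ranked list of priorities. It assumes a hypothetical 1 to 5 maturity scale, and the scores are illustrative placeholders, not DFO’s or Infotech’s actual results; `DimensionScore` and `prioritize_gaps` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DimensionScore:
    """One assessed dimension on a hypothetical 1 (initial) to 5 (optimized) scale."""
    name: str
    current: int  # assessed current state (the "blue" marking)
    target: int   # strategic target state (the "green" marking)

    @property
    def gap(self) -> int:
        # Maturity levels still to climb; never negative if current exceeds target.
        return max(self.target - self.current, 0)


def prioritize_gaps(scores: list[DimensionScore]) -> list[DimensionScore]:
    """Order dimensions from largest to smallest gap.

    Python's sort is stable even with reverse=True, so dimensions with
    equal gaps keep their original order.
    """
    return sorted(scores, key=lambda s: s.gap, reverse=True)


# Hypothetical placeholder scores for the five dimensions named in the article.
assessment = [
    DimensionScore("AI Governance", current=1, target=4),
    DimensionScore("Data Management", current=1, target=4),
    DimensionScore("Talent", current=2, target=4),
    DimensionScore("Process", current=2, target=3),
    DimensionScore("Technology", current=2, target=4),
]

for dim in prioritize_gaps(assessment):
    print(f"{dim.name:<16} current={dim.current} target={dim.target} gap={dim.gap}")
```

Ranking by gap size is only one possible policy; an organization might instead weight dimensions by dependency (for example, addressing data management before scaling technology), which would call for a different sort key.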

Building Out Governance Structures to Support AI

With the maturity assessment clearly revealing critical gaps in the data governance framework, Elhabyan adopted a pragmatic approach. She said:

“Let’s start with baby steps and use what we have now. We will take the use cases that we developed with unclassified data, and work with IT to take it into production with AI.”

DFO strategically selected data quality use cases as viable candidates for AI model development. These use cases provided an ideal starting point because they relied on high-dimensional but non-sensitive data, reducing compliance complexity while still offering significant value.

Simultaneously, DFO started building out AI governance components, including:

Roles and Responsibilities: Elhabyan created a matrix of the roles and responsibilities needed across the entire AI model life cycle. For example, it lays out the duties of the chief data steward, who became responsible for developing an AI literacy program.

Risk Management with Responsible and Ethical AI: DFO started putting together responsible AI guiding principles. They operationalized them through playbooks and a self-assessment questionnaire.

AI Governance Operating Model: This step used the outputs of the prior components to build an AI governance operating model. DFO would also need to build an intake process to align AI projects with governance.

Continuous Improvement: Elhabyan expected the AI governance model to evolve as understanding and maturity improved. To do so, DFO would use an AI self-assessment framework and questionnaire to guide improvements.
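An intake process paired with a self-assessment questionnaire can act as a stage gate between the POC, POV, and production phases described earlier. The sketch below is hypothetical and is not DFO’s actual process: the check names in `REQUIRED_CHECKS` are invented for illustration, loosely drawn from themes in this article (accountable roles, responsible AI self-assessment, infrastructure and KPIs, risk monitoring).

```python
from enum import Enum


class Stage(Enum):
    POC = 1         # proof of concept: small datasets, test environment
    POV = 2         # proof of value: field test with a limited set of live users
    PRODUCTION = 3  # full deployment


# Hypothetical questionnaire items that must be answered "yes" before entering each stage.
REQUIRED_CHECKS: dict[Stage, set[str]] = {
    Stage.POC: {"use_case_defined", "data_classification_known"},
    Stage.POV: {"accountable_owner_assigned", "responsible_ai_self_assessment_done"},
    Stage.PRODUCTION: {"infrastructure_ready", "kpis_defined", "risk_monitoring_plan"},
}


def missing_checks(target: Stage, answers: dict[str, bool]) -> set[str]:
    """Return checks not yet answered 'yes' for every stage up to and including target."""
    required: set[str] = set()
    for stage in Stage:  # Enum iteration follows definition order
        if stage.value <= target.value:
            required |= REQUIRED_CHECKS[stage]
    # Unanswered items count as "no".
    return {check for check in required if not answers.get(check, False)}


def can_advance(target: Stage, answers: dict[str, bool]) -> bool:
    """A project may enter the target stage only when nothing is missing."""
    return not missing_checks(target, answers)


answers = {
    "use_case_defined": True,
    "data_classification_known": True,
    "accountable_owner_assigned": True,
}
print(can_advance(Stage.POC, answers))             # True
print(sorted(missing_checks(Stage.POV, answers)))  # ['responsible_ai_self_assessment_done']
```

Making later stages inherit the earlier stages’ checks reflects the lesson in the article: a use case that clears the POC gate has not yet shown it is ready for a live environment.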

Although additional components were still needed, such as production risk monitoring and a responsible AI center of expertise, DFO was well on its way to having the governance foundations it needed.

Conclusion

Like many organizations, DFO planned on implementing AI to save money and operate more effectively. Additionally, it needed to align with Canada’s national AI strategy, which balances enhanced citizen services with responsible AI implementation.

Elhabyan faced the challenge of translating this high-level strategy into operational AI models. After establishing a clear definition of AI governance with her team, DFO initiated several use cases through its innovation pipeline, but these projects stalled before reaching production.

Collaboration became essential at this stage. Elhabyan initiated conversations with several stakeholders, resetting their expectations and aligning them on a valuable maturity assessment.

The structured maturity assessment, while revealing disappointing initial scores, provided direction on which governance components to build and how. Elhabyan and her team established multiple governance structures while viable AI models were under development. Although more work lay ahead, DFO was in a much stronger position to bring AI models into production with minimal issues, demonstrating how laying the foundations for AI governance and assessing capabilities leads to practical implementation.
