By Arsalan Farooq

I do not think it is an exaggeration to say data analytics has come into its own over the past decade or so. What started out as an attempt to extract business insights from transactional data in the ’90s and early 2000s has now transformed into an existentially critical function within most companies. Beyond the traditional OLTP/OLAP use cases, there are now petabyte-scale datastores, streaming analytics, Data Science, AI and machine learning, and so on.

At the same time, there has been an explosion in new tooling, technology, architectures, and design principles to support these new use cases: “big data” batch processing, columnar databases, key-value stores, cloud data warehouses, data lakes, data pipelining, and Lambda architectures, to name a few.

In this relatively dense and mature landscape, an exciting new concept has recently emerged. Proposed formally earlier this year by Armbrust, Ghodsi, Xin, and Zaharia (Databricks, UC Berkeley and Stanford University), the Lakehouse Architecture seeks to unify the hitherto disparate domains of classic OLAP/warehouses and streaming, real-time, advanced analytics. If realized as envisioned, this would be a big deal for data analytics.

To understand why, let us take a step back and look at how we arrived where we are today.

The Intrinsic Duality in Data Architecture

Whenever we want to store data in order to retrieve it later, we are faced with a fundamental choice: Should we optimize the design for write performance or read performance? This “duality” is intrinsic to all data storage schemes, including RDBMSs, filesystems, block storage, object storage, and so on.

While we have built increasingly complex data storage schemes, technologies, and architectures over time, this basic duality remains intact. OLTP vs. OLAP schemas, narrow vs. wide tables, columnar vs. row stores, ETL vs. ELT, schema-on-write vs. schema-on-read, etc., all trace back to this fundamental choice.
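To make the duality concrete, here is a minimal Python sketch of the same records held in a row-oriented layout and in a column-oriented layout. The three-field record and the class names are hypothetical, and the sketch shows only the access pattern, not how any real storage engine lays out pages.

```python
from dataclasses import dataclass, field

# Hypothetical toy record: (order_id, customer, amount).
Record = tuple[int, str, float]


@dataclass
class RowStore:
    """Write-centric layout: each record is kept together as one unit."""
    rows: list[Record] = field(default_factory=list)

    def insert(self, record: Record) -> None:
        # One insert touches one contiguous record.
        self.rows.append(record)

    def sum_amount(self) -> float:
        # An aggregate over one column still walks every full record.
        return sum(row[2] for row in self.rows)


@dataclass
class ColumnStore:
    """Read-centric layout: each column is kept as its own contiguous array."""
    order_ids: list[int] = field(default_factory=list)
    customers: list[str] = field(default_factory=list)
    amounts: list[float] = field(default_factory=list)

    def insert(self, record: Record) -> None:
        # One logical insert is split across every column array.
        order_id, customer, amount = record
        self.order_ids.append(order_id)
        self.customers.append(customer)
        self.amounts.append(amount)

    def sum_amount(self) -> float:
        # An aggregate reads only the single column it needs.
        return sum(self.amounts)
```

In memory both layouts answer the query equally fast; the point is which operation touches contiguous data. On disk, the row layout turns an insert into one sequential write, while the column layout lets a scan over `amount` skip the other columns entirely, and neither layout gets both for free.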

In the mid-2000s, I was at Oracle, and we were struggling to find the right compromise in the face of a rapidly expanding set of customer use cases. We had top-tier OLTP (write-centric) performance but were already seeing an explosion of OLAP (read-centric) data volumes for BI/EDSS use cases. Customers expected the same high performance from their OLAP workloads that they were getting from their OLTP workloads. I recall torturous discussions around star schemas, narrow vs. wide tables, cardinality vs. dimensionality, and so on. Owing to the fundamental difficulty in balancing write- vs. read-centric designs, I had half-jokingly taken to calling this duality the Heisenberg Principle of Data.

Rise of the Cloud Data Warehouses (CDWs)

There is a straight line to be drawn between those OLAP/warehouse efforts of a decade and a half ago and today’s CDWs. The primary BI/EDSS/analytics use case for warehouses has not changed. Data volumes, on the other hand, have.

Data warehouse design using a traditional RDBMS is typically confined to a relational structure and a row-oriented store, and is further constrained by on-premises compute and storage. Even with clever schema design, this limits the scale and performance the system can deliver.

The advent of the cloud has removed most, if not all, of these constraints. CDWs can access almost unlimited compute, so they can scale out using bespoke MPP (massively parallel processing) schemes. They are not limited by storage size or type, and most are free to use analytics-friendly columnar stores on custom storage backends. This, in turn, lends itself well to partitioning, compression, and other clever schemes.
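Why columnar storage compresses well can be seen with run-length encoding, one simple example of the encodings columnar formats use: values in a single column share a type and often repeat, especially once the data is sorted or partitioned. The sketch below is illustrative only; the `region` column is hypothetical and real CDWs choose among several encodings.

```python
from itertools import groupby


def run_length_encode(column: list[str]) -> list[tuple[str, int]]:
    """Collapse consecutive repeated values into (value, run_length) pairs."""
    return [(value, len(list(group))) for value, group in groupby(column)]


def run_length_decode(runs: list[tuple[str, int]]) -> list[str]:
    """Expand (value, run_length) pairs back into the original column."""
    return [value for value, count in runs for _ in range(count)]


# Hypothetical "region" column, sorted as a partitioned store might keep it.
region = ["EU"] * 4 + ["NA"] * 3 + ["APAC"] * 2
encoded = run_length_encode(region)
print(encoded)  # [('EU', 4), ('NA', 3), ('APAC', 2)]
assert run_length_decode(encoded) == region
```

Nine values shrink to three pairs here. In a row store, the same `region` values would be interleaved with every other field of each record, so these long runs would never form.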

The end result is a fully managed BI/analytics offering virtually unconstrained by data volume and able to deliver unprecedented query performance. What is not to love?

Unstructured Data and the Emergence of Data Lakes

It so happens that the great data explosion of the early 21st century hasn’t been confined to traditional data sources. There has been a massive proliferation of new categories of data: web data, ad data, instrumentation data, behavioral data, and IoT data, to name a few.

Data Science (AI/ML models), real-time streaming, and predictive analytics are the primary consumers of these new data categories. They have rapidly gained importance within the enterprise, to the point where they now rival traditional BI/analytics in business value.

As it turns out, CDWs are not best suited to these new ultra-high-volume, high-velocity, lower-quality, and often semi- or unstructured data sources. CDWs are schema-on-write systems necessitating clunky ETL processes during ingestion that increase complexity and limit velocity. They also rely on periodic batch uploads, which introduce too much latency for real-time streaming analytics. Finally, their cost structure is ill-suited to massive amounts of data, most of which may never be usable or used. Despite concerted attempts to adapt CDWs to these new use cases (small/mini batching, “external” tables, etc.), the fundamental performance, complexity, and cost issues keep CDWs an imperfect solution at best.

To meet the need, we have seen new architectures emerge that favor zero-friction ingest into cheap commodity storage and leave expensive, complex tasks like data cleaning, validation, and schema enforcement to later. These are the data lakes.

Source: Databricks

Data lakes are typically constructed using open file formats (e.g., Parquet, ORC, Avro) on commodity cloud storage (e.g., S3, GCS, ADLS), allowing for maximum flexibility at minimum cost. Data validation and transformation happen only when data is retrieved for use. Since there are no barriers to ingestion volume or velocity, and validation/ETL can be performed on an as-needed basis, data lakes have proven well suited to ingesting large quantities of potentially low-quality, unstructured data for selective consumption by Data Science and real-time, streaming analytics applications.
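The contrast between a warehouse validating data at ingest (schema-on-write) and a lake deferring validation until retrieval (schema-on-read) can be sketched in a few lines of Python. The two-field `SCHEMA`, the JSON-lines input, and all function names are hypothetical; plain lists stand in for the warehouse table and the lake.

```python
import json

# Hypothetical target schema: field name -> required Python type.
SCHEMA = {"user_id": int, "event": str}


def validate(record: object) -> dict:
    """Return the record trimmed to SCHEMA, or raise ValueError if it does not conform."""
    if not isinstance(record, dict):
        raise ValueError(f"expected a JSON object, got {record!r}")
    for name, expected in SCHEMA.items():
        if not isinstance(record.get(name), expected):
            raise ValueError(f"bad or missing field {name!r} in {record!r}")
    return {name: record[name] for name in SCHEMA}


def ingest_schema_on_write(raw_lines: list[str], table: list[dict]) -> None:
    """Warehouse-style: parse and validate up front; one bad line rejects the whole batch."""
    parsed = [validate(json.loads(line)) for line in raw_lines]
    table.extend(parsed)


def ingest_schema_on_read(raw_lines: list[str], lake: list[str]) -> None:
    """Lake-style: store raw lines as-is, with no parsing or checks."""
    lake.extend(raw_lines)


def read_schema_on_read(lake: list[str]) -> list[dict]:
    """Apply the schema only at query time, skipping records that do not fit."""
    rows = []
    for line in lake:
        try:
            # json.JSONDecodeError is a subclass of ValueError, so one except covers both.
            rows.append(validate(json.loads(line)))
        except ValueError:
            continue  # this consumer ignores malformed data; another consumer might not
    return rows


raw = ['{"user_id": 1, "event": "click"}', "not json", '{"user_id": "x"}']
lake: list[str] = []
ingest_schema_on_read(raw, lake)
print(read_schema_on_read(lake))  # [{'user_id': 1, 'event': 'click'}]
```

Passing the same `raw` lines to `ingest_schema_on_write` raises `ValueError` before anything is stored. That is the friction the warehouse pays at ingest, while the lake stores everything and pushes the cost onto each reader.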

This has come at a cost, however. Most enterprises quickly found themselves maintaining two parallel verticals in their Data Architecture: a structured-data CDW pipeline optimized for query performance (read-centric), running right alongside a data lake and processing vertical optimized for ingest (write-centric) that serves Data Science and streaming analytics. The duality continues to plague us.

The Lambda Architecture: “If You Can’t Beat ’em, Join ’em at the Hip”

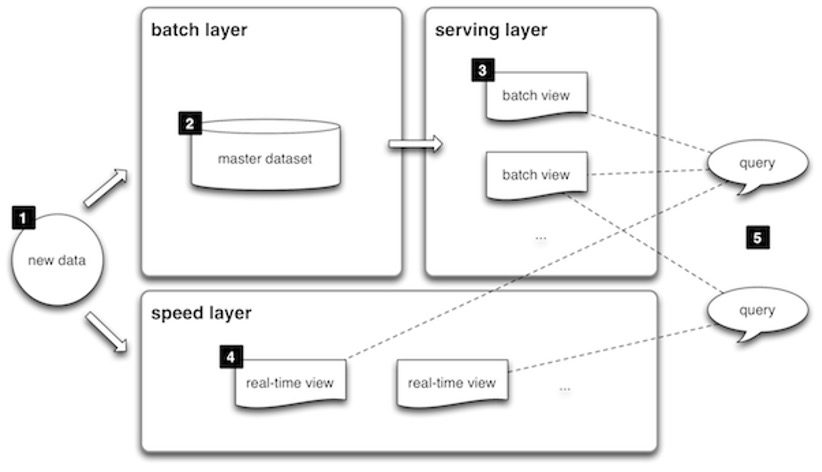

Unsurprisingly, it was not long before data architects started looking for a way to mitigate the cost and complexity of maintaining two parallel architectures. This led to the Lambda Architecture.

Source: Lambda Architecture

In a Lambda Architecture, there are still two parallel pipelines, one for batch and one for stream processing, but the ingest and consumption (API) sides are consolidated and rationalized. In this way, BI/analytics and streaming analytics applications are presented with a common interface. Likewise, duplication on the ingestion side is mitigated.
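A toy Python sketch can show the shape of this arrangement: a single ingest point feeding both pipelines, a batch view recomputed from the full history, a speed layer counting only recent events, and a serving function that merges the two behind one interface. Page-view counting is a hypothetical workload, and the in-memory classes stand in for what would be separate batch and streaming systems in practice.

```python
from collections import Counter


def batch_view(master_dataset: list[str]) -> Counter:
    """Batch layer: recompute page-view counts from the full, immutable history."""
    return Counter(master_dataset)


class SpeedLayer:
    """Speed layer: incrementally count events that arrived since the last batch run."""

    def __init__(self) -> None:
        self.recent: Counter = Counter()

    def on_event(self, page: str) -> None:
        self.recent[page] += 1

    def reset(self) -> None:
        # Discard counts once a batch run has absorbed these events.
        self.recent.clear()


def ingest(page: str, master_dataset: list[str], speed: SpeedLayer) -> None:
    """Single ingest point: each event feeds both the batch and the speed pipelines."""
    master_dataset.append(page)
    speed.on_event(page)


def serve(page: str, batch: Counter, speed: SpeedLayer) -> int:
    """Serving layer: one query interface that merges the batch and real-time views."""
    return batch[page] + speed.recent[page]


master: list[str] = []
speed = SpeedLayer()
for page in ["home", "home", "pricing"]:
    ingest(page, master, speed)
batch = batch_view(master)  # batch run absorbs everything ingested so far
speed.reset()
ingest("home", master, speed)  # arrives after the batch run
print(serve("home", batch, speed))  # 3
```

Even in this toy, the counting logic lives in two places, and `speed.reset()` must line up exactly with the batch run or events are double-counted or lost. That hand-off is the kind of synchronization burden that makes Lambda deployments hard to maintain.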

While this scheme does rationalize some shared attributes of batch and stream processing pipelines, and arguably confers a few additional benefits such as fault tolerance and query efficiency, it has proven complex, costly, and difficult to maintain in its own right. Keeping the two pipelines in sync, managing data duplication, handling errors, and preserving data integrity have often proven non-trivial.

At its best, the Lambda Architecture aims to reclaim some architectural efficiencies by rationalizing commonalities between the batch and real-time pipelines. It does not, however, address the duality. A grand unification this is not.

The Lakehouse

A suggestive portmanteau of “data lake” and “data warehouse,” the term “Lakehouse” evokes a true merger of its two constituent elements. Indeed, the idea is that, given the separation of compute and storage afforded by today’s cloud environments, it is now possible to combine the warehouse and lake schemes into a single, unified architecture: the Lakehouse.

In a Lakehouse, structured, semi-structured, and unstructured data are all stored on commodity storage in direct-access, open-source file formats. However, a layer providing a traditional “table” abstraction and capable of performing ACID transactions sits atop this base object store.
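How can a table with transactional guarantees sit on top of a plain object store? One common answer, loosely modeled on the transaction-log approach used by Delta Lake, is to treat the table as immutable data files plus an ordered log of commits, where each commit is a single create-only write. The Python sketch below is a minimal illustration under that assumption only: `ObjectStore`, `Table`, and their methods are hypothetical, and it shows atomic commits and snapshot reads, not full ACID, schemas, checkpoints, or conflict resolution.

```python
import json
from typing import Optional


class ObjectStore:
    """In-memory stand-in for a commodity object store (hypothetical API)."""

    def __init__(self) -> None:
        self.objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self.objects[key] = data

    def put_if_absent(self, key: str, data: bytes) -> bool:
        # Create-only write: succeeds only if no object with this key exists yet.
        # Real object stores differ in whether and how they offer this atomically.
        if key in self.objects:
            return False
        self.objects[key] = data
        return True

    def get(self, key: str) -> bytes:
        return self.objects[key]

    def list_keys(self, prefix: str) -> list[str]:
        return sorted(k for k in self.objects if k.startswith(prefix))


class Table:
    """Hypothetical table abstraction: immutable data files plus an ordered commit log."""

    def __init__(self, store: ObjectStore, name: str) -> None:
        self.store = store
        self.log_prefix = f"{name}/_log/"
        self.data_prefix = f"{name}/data/"

    def _log_key(self, version: int) -> str:
        # Zero-padded so the lexical order of keys matches numeric version order.
        return f"{self.log_prefix}{version:020d}.json"

    def version(self) -> int:
        """Latest committed version; -1 means nothing has been committed yet."""
        return len(self.store.list_keys(self.log_prefix)) - 1

    def live_files(self, version: Optional[int] = None) -> set[str]:
        """Replay the log up to `version` to get a consistent snapshot of the table."""
        last = self.version() if version is None else version
        files: set[str] = set()
        for v in range(last + 1):
            entry = json.loads(self.store.get(self._log_key(v)))
            files |= set(entry["add"])
            files -= set(entry["remove"])
        return files

    def commit(self, add: dict[str, bytes], remove: set[str], read_version: int) -> int:
        """Write new data files, then publish them with a single create-only log write."""
        for name, data in add.items():
            # File names are assumed unique; until the log entry lands these files are
            # invisible to readers, and after a failed commit they are simply orphans.
            self.store.put(self.data_prefix + name, data)
        entry = json.dumps({
            "add": [self.data_prefix + name for name in add],
            "remove": sorted(remove),
        }).encode()
        new_version = read_version + 1
        if not self.store.put_if_absent(self._log_key(new_version), entry):
            # Another writer committed first: an optimistic writer would re-read and retry.
            raise RuntimeError(f"conflict: version {new_version} already exists")
        return new_version


store = ObjectStore()
events = Table(store, "events")
v0 = events.commit({"part-0.parquet": b"rows..."}, set(), read_version=events.version())
v1 = events.commit({"part-1.parquet": b"rows..."}, {"events/data/part-0.parquet"}, read_version=v0)
print(sorted(events.live_files()))    # ['events/data/part-1.parquet']
print(sorted(events.live_files(v0)))  # ['events/data/part-0.parquet']
```

The design choice worth noting is that the log, not a listing of the data directory, defines the table. A commit becomes visible all at once when its one log object appears, and a reader pinned to a version keeps seeing the same files while later commits land, all without giving up raw files in open formats on cheap storage.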

Source: CIDR

Classical RDBMS features such as SQL-based access, ACID transactions, query optimization, indexing, and caching are available through this layer to traditional BI/analytics applications. At the same time, Data Science/ML/streaming analytics use cases retain direct access to the raw data. Meanwhile, data ingestion remains a zero-friction, low-cost proposition given commodity storage and open formats.

The implications are staggering. The Lakehouse covers the entire range of use cases, from BI/EDSS/analytics to Data Science/ML/streaming analytics in one, coherent architecture. No more data lake/warehouse distinction, no more BI/ML separation, no more data duplication, no more clunky parallel pipelines in Lambda Architectures.

So, Fait Accompli Then … Unification at Last?

No, not quite yet. The devil will be in the details.

While recent efforts such as the Delta Lake project and Databricks’ Delta Engine demonstrate the viability of key elements of a Lakehouse Architecture, these implementations are far from complete, and significant questions remain.

For instance, can the managed table abstraction and its implementations meet performance expectations at scale? Can critical features such as governance, fine-grained access controls, and data integrity be delivered while maintaining performance and architectural compacts? What about the sophisticated SQL and DML features that data-warehousing veterans rely on and will not switch without? It is too early to draw a definitive conclusion and render a verdict.

What is not in doubt is that we are going to see an arms race between vendors scrambling to bring a Lakehouse offering to market. The value proposition and market implications of a unified data analytics platform are simply too significant to miss out on. Databricks’ Delta Engine, AWS Lake Formation, and Microsoft’s Azure Synapse are already touting unified analytics. Others are surely soon to follow.

Despite all the unanswered questions and legitimate concerns, the Lakehouse is a promising step forward in Data Architecture. It is quite possible we are witnessing a tectonic plate shift in the data analytics landscape.

One thing is certain: I have not been this excited about Data Architecture since I was arguing “long-skinny” tables vs. “full-fat” tables more than a decade ago.