Discussing the rights and wrongs of artificial intelligence (AI) is more urgent than ever before – and more difficult. In the first of a series of articles, I will propose ways to better structure the debate about AI ethics.

From online chatbots to automated warehousing and disease diagnosis, AI is at work in more and more areas of everyday life. As a result, the question of what we should – and should not – use AI for is an increasingly urgent one for ever more people. But discussions about AI ethics often lead nowhere, as people end up talking past one another: I might want to know whether using AI in a particular field will make good things happen, while you might be focusing on whether the processes and people designing it are reliable or their intentions good.

Finding answers to a growing number of ethical questions seems harder than ever. People with different backgrounds, experiences, and expertise home in on different ethical aspects and approach issues in different ways: Do we need self-driving cars? Can self-driving cars ever be as safe as human-driven cars? What if – by accident or someone’s design – self-driving cars turn into urban prowlers or surveillance machines? Discussing all these issues at once is a sure path to confusion. We need to bring more structure to our debates about AI ethics to finally get some practical answers.

Issues relating to self-driving cars have been discussed for years. But as classic (or clichéd) as the topic might be, there are still open questions about the wisdom of pursuing the technology. When a Tesla with drive-assist features crashes, it has an extremely high chance of making the news. To me, this is a sure sign that we’re still grappling with the question of whether we can make self-driving cars safe. But it is also a sure sign that many people have already dealt with another question: whether we actually need self-driving cars. The answer, largely, is yes: self-driving cars are not a bad idea – they don’t drink and they don’t fall asleep, for one thing.

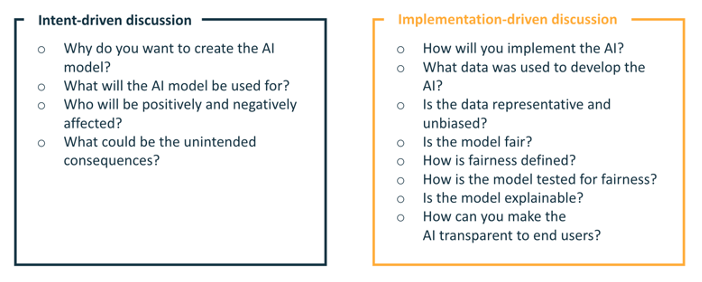

So, two things we should talk about when we talk about AI ethics are intent and implementation. There are other useful conversations, and I’ll get to those in subsequent pieces. But intent and implementation are an excellent pair to start with. Intent focuses on what we should use AI for, implementation on how we should use it. Structuring our debates along those lines allows us to conclude that self-driving cars “only” pose ethical problems in relation to the how, not the what (which is not to underplay the decision-making dilemmas of safe driving, as shown by simulators like moralmachine.net).

On the other hand, a discussion of the ethics of AI-driven art usually begins with the what, not the how. Take the recent “completion” of Beethoven’s Tenth Symphony by an algorithm. What purpose does this use of algorithms serve? Does it actually help our understanding of Beethoven, as claimed by the music and AI experts responsible for the project? Or is AI being misused for marketing a music festival? Only if the “ethics of intent” are deemed acceptable should we move on to the “ethics of implementation.” If intent is held to be unacceptable, implementation becomes irrelevant.

The distinction between intent and implementation is extremely useful in laying the groundwork for debate. AI-driven war? I think we’d all agree on starting with intent and not getting caught up in the how straightaway. AI-designed vaccines? Not a bad idea, but we have to look at the implementation to make sure that, for example, the algorithms doing the work are bias-free. Facial recognition? Bad if used to spy on people, but good for unlocking smartphones – so let’s skip to discussing implementation, not intent. AI-driven recruitment? I’m really not sure about this one, so perhaps best to start with the what.

As I hope you can see, this approach will help us ask the right questions, but it will not make finding answers any easier. We might even first have to debate what to debate – intent or implementation. But a discussion about the structure of a debate leads to better results than an unstructured debate. In future articles, I’ll also be looking at AI ethics through the distinctions of opportunity vs. risk and AI as tool vs. AI as agent, before taking stock of the present and the future. Of course, my framings are neither definitive nor exhaustive. But they can help us focus on the right set of questions – and maybe turn up many new ones.