DATE: December 2, 2020. This event has passed. The recordings will be published On Demand within one week of the live event.

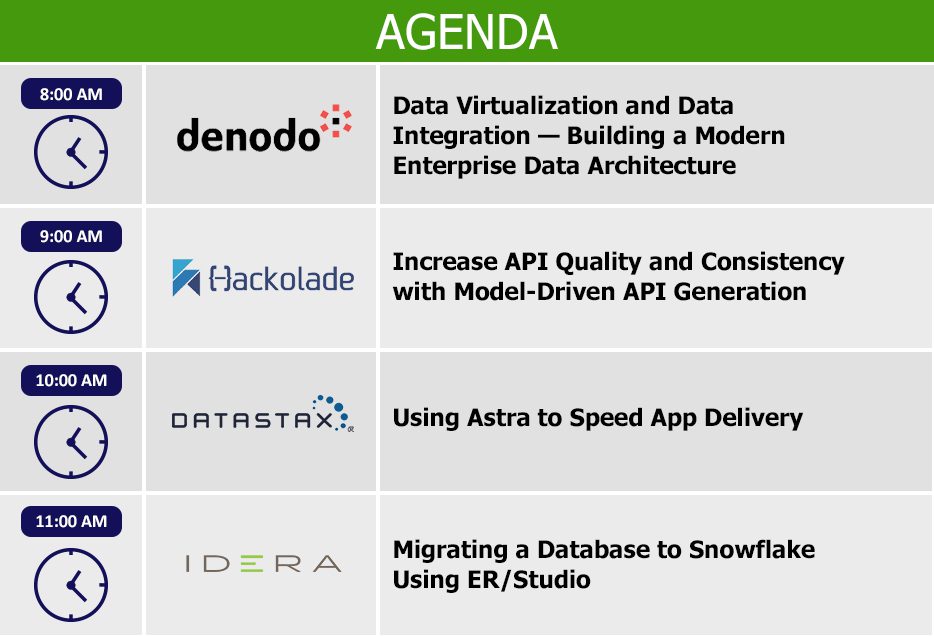

TIME: 8:00 AM – 11:45 AM Pacific / 11:00 AM – 2:45 PM Eastern

PRICE: Free to all attendees

Welcome to DATAVERSITY’s newest education opportunity, Demo Day, an online exhibit hall. Many members of our data-driven community have asked for a vendor-driven online event where they can learn about tools and services that could contribute to the success of their Data Management programs, directly from the vendors who provide them.

Register to attend one or all of the sessions or to receive the follow-up email with links to the slides and recordings.

Session 1: Data Virtualization and Data Integration – Building a Modern Enterprise Data Architecture

Gartner projects that by 2022, 60% of all organizations will implement data virtualization as one key delivery style in their data integration architecture. Denodo was recently named a Leader in the 2020 Gartner Magic Quadrant for Data Integration Tools and named the Best Data Virtualization Solution in DBTA’s 2020 Readers’ Choice Awards. Data virtualization provides a logical data layer that integrates enterprise data siloed across disparate systems, both on-premises and in the cloud, manages the unified data for centralized security and governance, and delivers it to business users in real time.

Attend this session to learn and see demonstrated:

- What data virtualization really is and how it works

- How it differs from other enterprise data integration technologies

- How you build and access the data virtualization layer to support a modern Data Architecture

Dave Chiou

Senior Solutions Engineer, Denodo

Dave is a Senior Solutions Engineer at Denodo, a leading data virtualization company. He has over 25 years of experience working with customers on data virtualization, Data Governance, data warehouse, big data, and analytics projects. Dave has spoken at several user groups and regional DAMA meetings. Prior to joining Denodo, he worked at IBM supporting many Fortune 100 customers and was an IBM Redbook author on Data Warehousing. He holds an MBA and a Bachelor’s degree in Electrical Engineering from the University of Illinois.

Session 2: Increase API Quality and Consistency with Model-Driven API Generation

According to Gartner, APIs are the basis for every digital strategy. With application architectures becoming increasingly distributed and modular, and with the proliferation of microservices, it is critical to ensure the quality and consistency of APIs.

In this session, you’ll see how NoSQL schema designs based on access patterns can be converted into full API specifications with a few mouse clicks, to serve as a basis for API-driven development. A customizable API template lets users control the output configuration and create APIs that customers and developers will love.

Pascal Desmarets

Founder and CEO, Hackolade

Pascal Desmarets leads Hackolade and all of its efforts involving business strategy, product innovation, and customer relations, as the company focuses on producing user-friendly, powerful visual tools that smooth the onboarding of NoSQL technology into enterprise IT landscapes.

Hackolade is the pioneer in Data Modeling for NoSQL databases and JSON in RDBMS, providing a comprehensive suite of Data Modeling tools for various NoSQL databases and APIs. Hackolade supports Data Modeling for MongoDB, Neo4j, Cassandra, ArangoDB, Couchbase, Cosmos DB, DynamoDB, Elasticsearch, EventBridge Schema Registry, Glue Data Catalog, HBase, Hive, MarkLogic, Snowflake, SQL Server, Synapse, TinkerPop, and more. It also applies its visual design to Avro, JSON Schema, Parquet, Swagger, and OpenAPI, and is rapidly adding new targets for its physical Data Modeling engine.

Session 3: Using Astra to Speed App Delivery

In today’s world, it is vital to turn ideas into features quickly. Keeping developers and data scientists on track and giving them the right workbench is a critical cog in the engine. This demo will show you how to spin up databases in DataStax Astra and which tools to use to populate them with data quickly.

Andrew Goade

Data Architect, DataStax

Andrew Goade is a Data Architect for DataStax, where he helps customers move to the next level in their data journey. With more than 21 years in a variety of technical roles, including application development and infrastructure management, Andrew has a unique view of how to tackle the most difficult challenges with cutting-edge, cost-effective technology. These experiences, plus his M.B.A. from Elmhurst College, allow Andrew to focus on the business drivers at hand and work with all levels of an organization to develop outcomes that positively affect the bottom line. Feel free to contact Andrew at andrew.goade@datastax.com or find him at https://www.linkedin.com/in/andygoade/.

Session 4: Migrating a Database to Snowflake Using ER/Studio

As cloud services gain in popularity, Snowflake has grown into one of the premier platforms for data warehouses in the cloud. Data professionals who design and manage Snowflake data warehouses can use ER/Studio to capture organizational requirements, translate requirements into design, and deploy the design to Snowflake.

Join IDERA for a demonstration of how ER/Studio’s comprehensive support for Snowflake provides better visibility into data structures and makes them easier to update. This includes:

- Forward and reverse engineering with data definition language (DDL) code

- Compare and merge capabilities with ALTER script generation

- Access to tables, views, columns, and constraints

- Table persistence and clustering keys

- Support for Snowflake features: procedures, functions, materialized views, sequences, users, roles, and schemas

Arthur Lindow

Senior Solutions Architect, IDERA

Arthur Lindow is a Senior Solutions Architect at IDERA Software for ER/Studio Data Modeling Tools. Arthur is a Data Professional with 15-plus years of experience converting, migrating, storing, analyzing, and reporting data. He has extensive experience with relational database design and modeling, stored procedures, user-defined functions, and views.